Software Vision Sensor

The first scalable camera-based software for the future of mobility.

We increase the autonomy of mobile robots and machine operators by providing the visual common sense they need to navigate.

What we offer

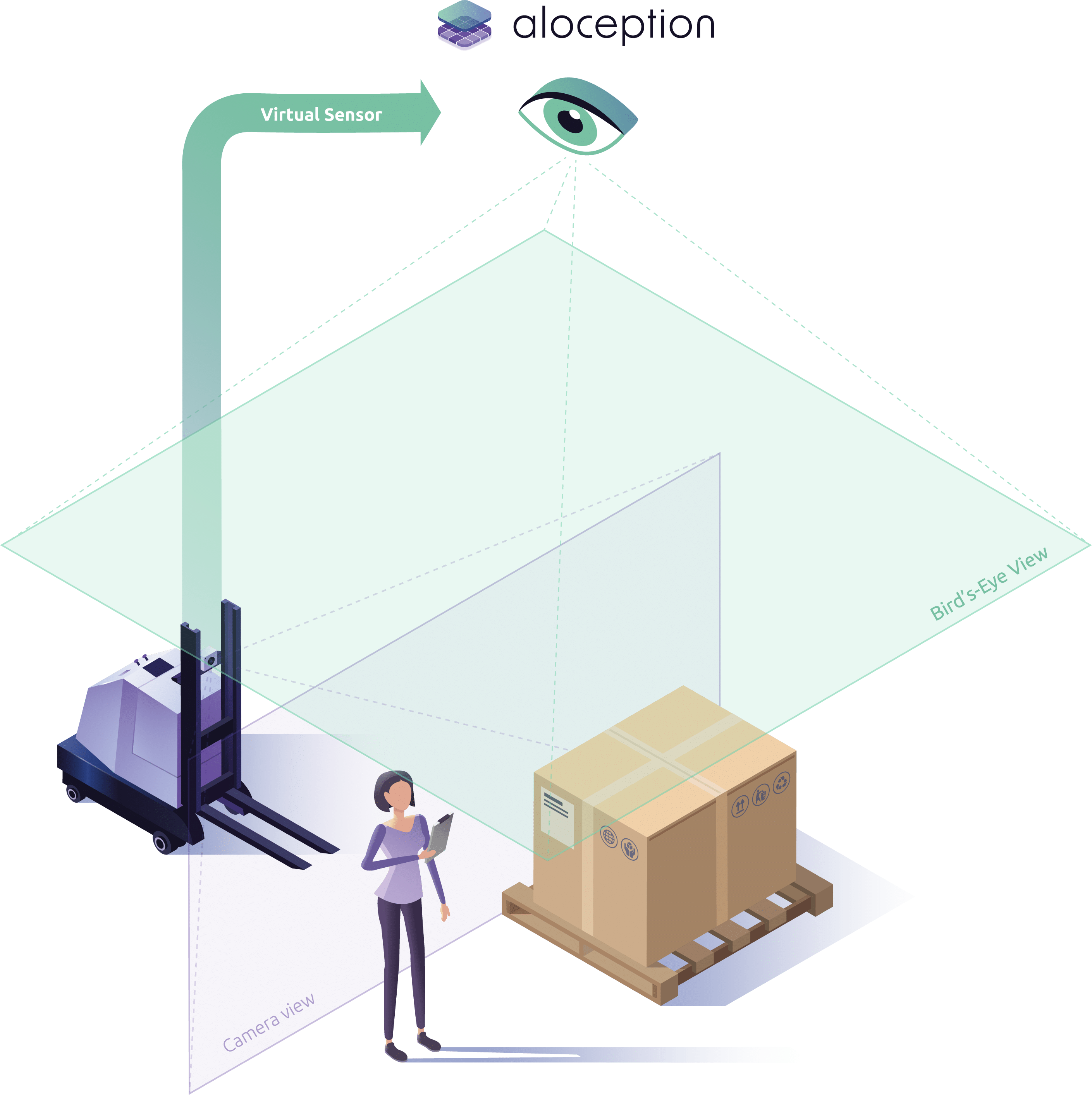

Aloception Bird’s-Eye View

While traditional cameras only see what is in front of them, Aloception Bird’s-Eye View provides a single yet complete and enriched representation of a scene.

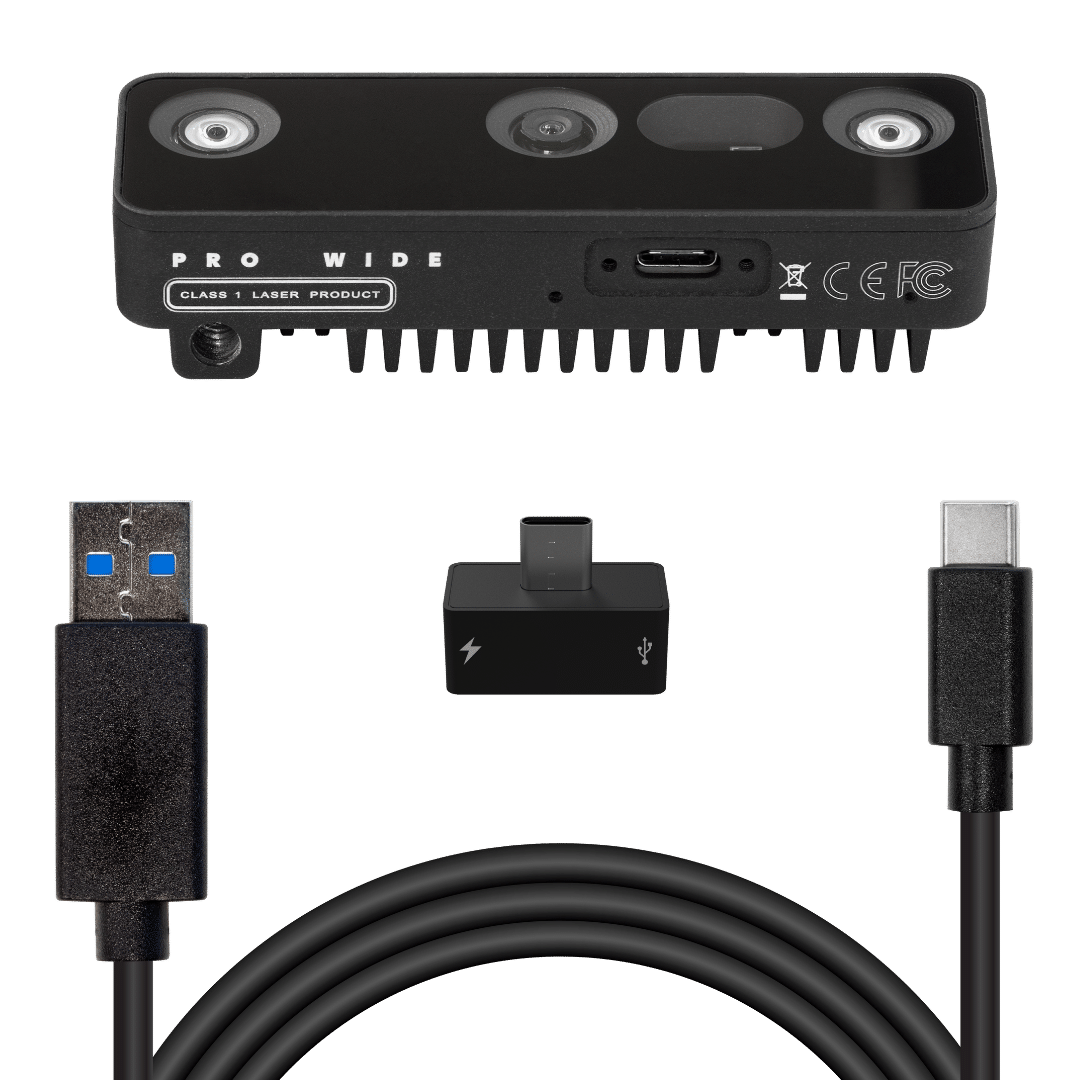

Discover Aloception with the ADK

The ADK is a Plug & Play software vision sensor that lets you test Aloception on your own use cases.

We stand where observation is key

Who we serve

The future of Mobility

Genericity enables adaptability. With the same core technology and a cross-functional strategy, Aloception helps shape the Future of Mobility by addressing two major markets: autonomous mobility and assisted mobility.

Autonomous Mobility

Aloception provides high-value information that robotics manufacturers can use directly in targeted end-user tasks.

Robot manufacturers can maximize robot ROI through a higher availability rate, thanks to early detection of deadlock situations.

Assisted Mobility

Aloception is designed to help heavy-vehicle drivers and machine operators better understand their surroundings.

Operators can reduce the number of dangerous situations they encounter while improving their comfort.

How we do it

Children walk before they talk

Like a child who knows how to navigate around a chair and a dog before knowing their names, Aloception focuses on understanding the depth and geometry of the environment rather than the semantics of objects.

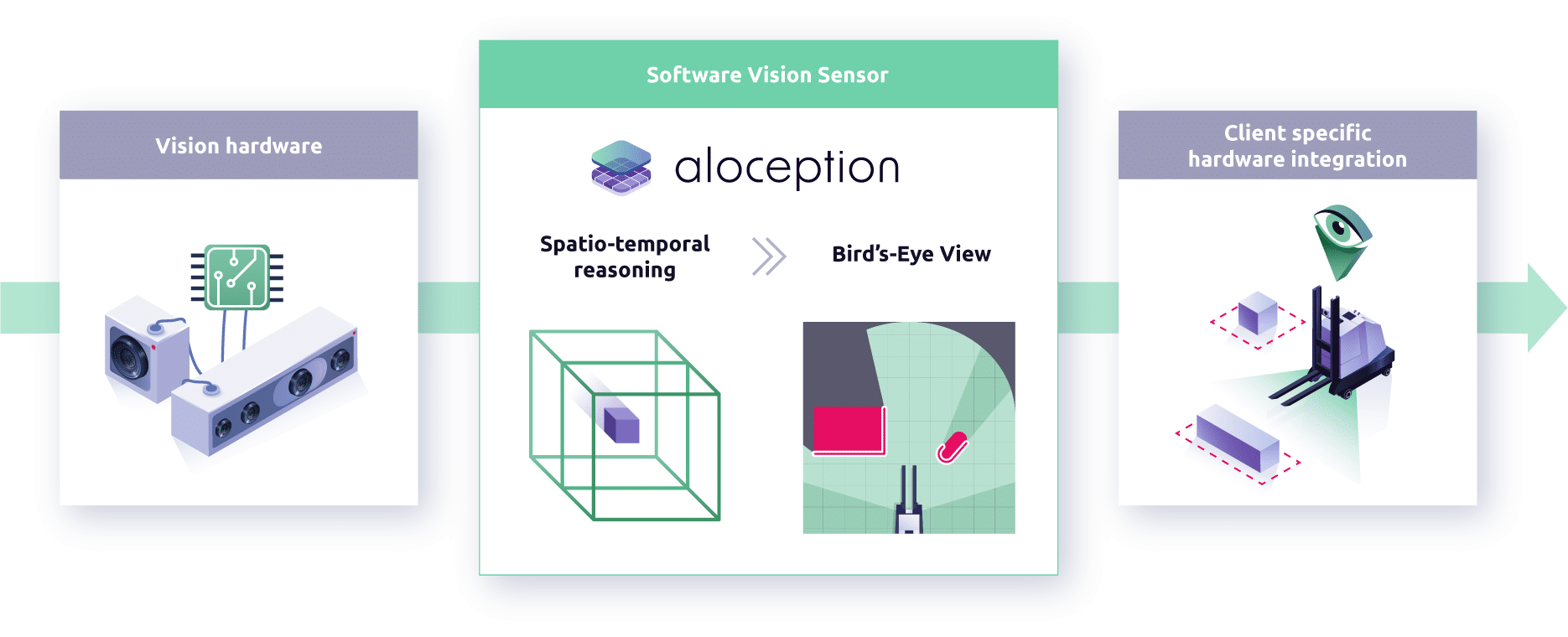

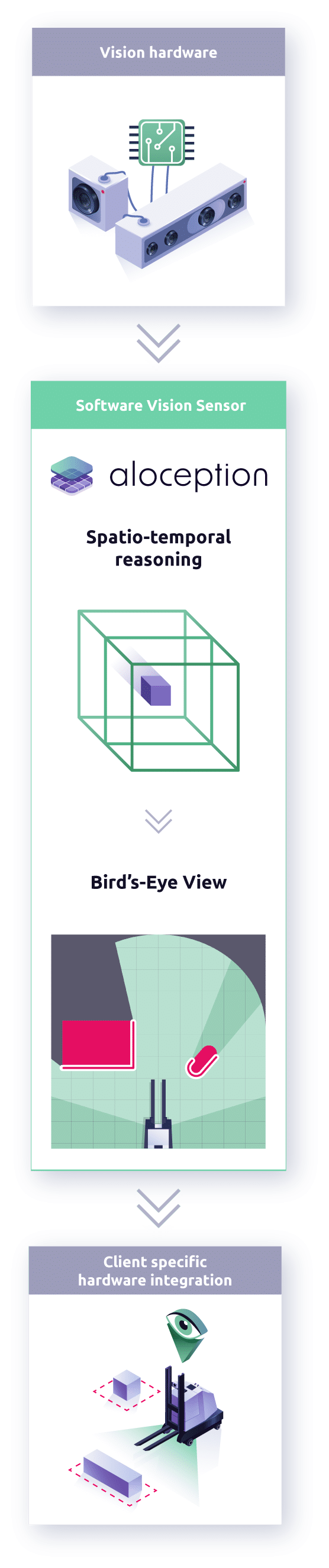

Camera-based only

Monocular, stereo, fisheye

Unified architecture

Automatic sensor calibration and fusion

Spatio-temporal reasoning

Temporal and geometric analysis for greater robustness

Lightweight real-time understanding

Embedded system and recurrent formulation

Humans navigate with their eyes; the rest happens in the brain.

We focus on the brain, not the extra sensors.

Who we are

Ambitious & Creative team

Founded in 2020 by Rémi Agier and Thibault Neveu, Visual Behavior now has 15 creative and passionate team members focused on solving observation problems for robotics.

Drawing on our extensive experience in cognitive science, computer vision, and robotics engineering, we aim to help smart mobility companies with a scalable and affordable scene understanding solution.

Rémi AGIER

CEO & Co-founder

Thibault NEVEU

CTO & Co-founder

Visual Behavior is supported by national and regional institutions