Aloception Bird’s-Eye View

Smart top-down vision for autonomous navigation & driving assistance systems

Discover the first software vision sensor

Essential information for smart navigation

Like an artificial visual cortex, our core technology is composed of modules performing specific cognitive functions.

Their interaction enriches scene understanding by generating a set of fundamental outputs for smart navigation.

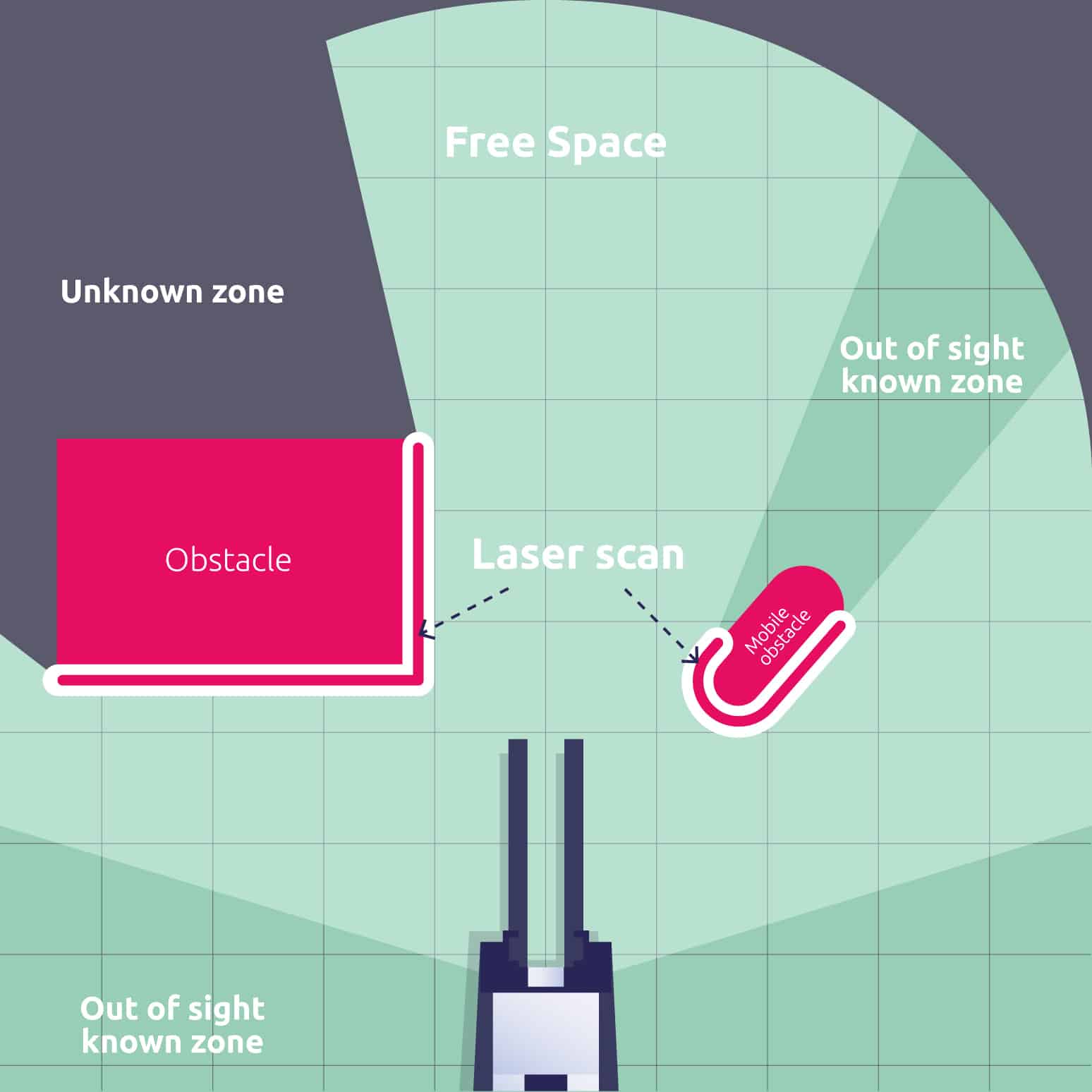

Freespace

Depth map

Obstacle detection

People detection

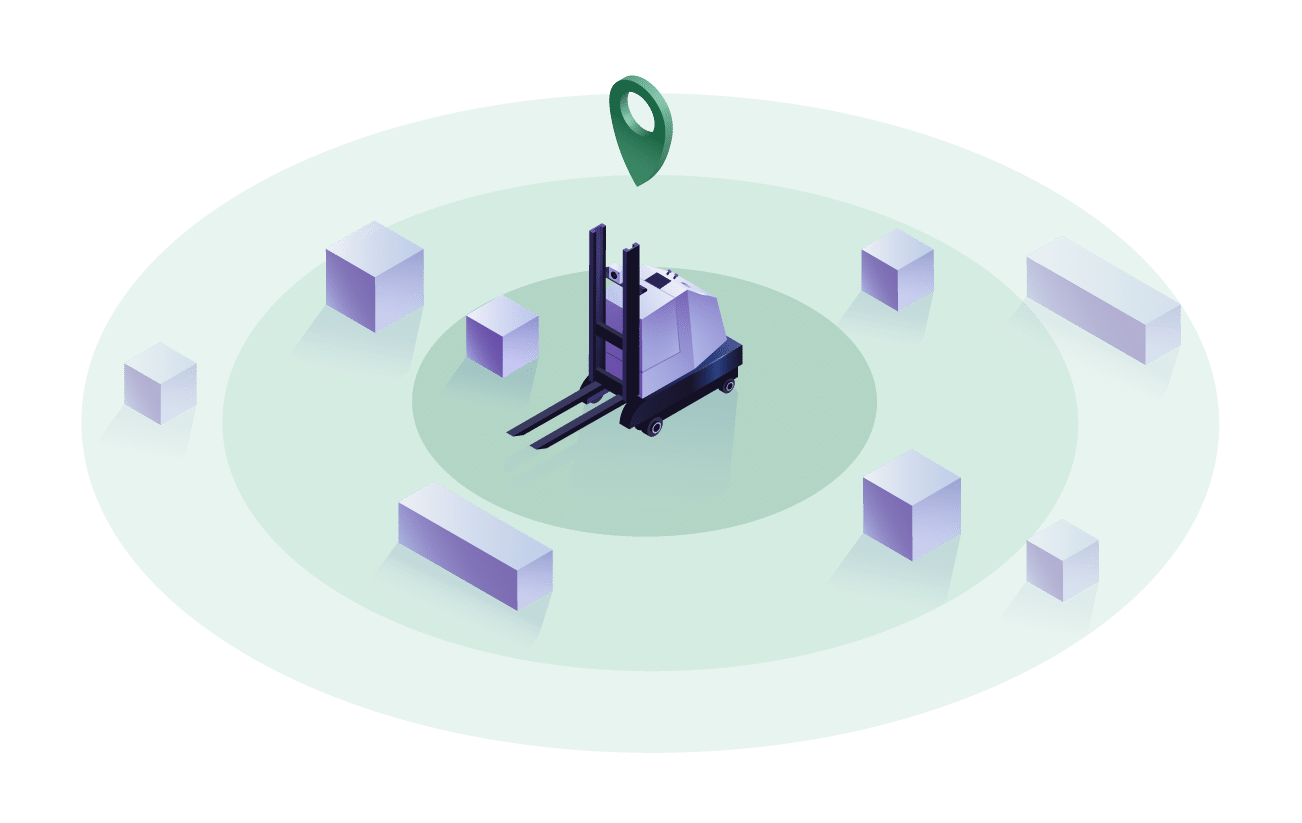

The all-in-one output

All of the essential, generic outputs are merged into a single view: the Bird’s-Eye View (BEV).

This representation is an enriched, ready-to-use final output that offers a practical visual understanding of the scene.
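To make the idea of a merged view concrete, here is a minimal sketch of how per-module outputs could be fused into one top-down grid. It is an illustration only, not Aloception's implementation: the `Cell` labels, the `merge_bev` and `render` helpers, and the priority order (person over obstacle over free space) are all assumptions made for this example.

```python
from enum import IntEnum
from typing import List, Sequence, Tuple


class Cell(IntEnum):
    """Hypothetical per-cell labels for the merged top-down grid."""
    UNKNOWN = 0
    FREE = 1
    OBSTACLE = 2
    PERSON = 3


Grid = List[List[Cell]]
Coords = Sequence[Tuple[int, int]]  # (row, col) grid indices


def merge_bev(rows: int, cols: int, free_cells: Coords,
              obstacle_cells: Coords, person_cells: Coords) -> Grid:
    """Fuse three module outputs into a single grid.

    Layers are written in increasing priority (assumed order), so a
    person overrides an obstacle, which overrides free space.
    Cells outside the grid are ignored.
    """
    grid = [[Cell.UNKNOWN for _ in range(cols)] for _ in range(rows)]
    layers = (
        (Cell.FREE, free_cells),
        (Cell.OBSTACLE, obstacle_cells),
        (Cell.PERSON, person_cells),
    )
    for label, cells in layers:
        for r, c in cells:
            if 0 <= r < rows and 0 <= c < cols:
                grid[r][c] = label
    return grid


def render(grid: Grid) -> str:
    """Draw the grid as text: '?' unknown, '.' free, '#' obstacle, 'P' person."""
    symbols = {Cell.UNKNOWN: "?", Cell.FREE: ".", Cell.OBSTACLE: "#", Cell.PERSON: "P"}
    return "\n".join("".join(symbols[cell] for cell in row) for row in grid)


bev = merge_bev(
    rows=3,
    cols=4,
    free_cells=[(r, c) for r in range(3) for c in range(3)],
    obstacle_cells=[(0, 1), (0, 2)],
    person_cells=[(0, 2), (5, 5)],  # (5, 5) lies outside the grid and is dropped
)
print(render(bev))
# .#P?
# ...?
# ...?
```

A navigation stack can then read a single structure instead of reconciling four separate outputs, which is the practical appeal of an all-in-one view.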

Discover the capabilities of Aloception in various environments

Smart understanding for higher adaptability

By building a rich geometric representation of a scene and understanding the physical properties of its elements, Aloception opens up new possibilities for autonomous and assisted mobility, such as adapting to unstructured and unfamiliar environments.

Better depth estimation

Better trajectory estimation

Better obstacle detection

A new approach, based on next-generation models

Visual Foundation Model (VFM)

Our technology leverages the latest advances in foundation models, focusing on image-based models rather than text-based language models.

Our VFM is designed to understand the entire scene, both semantically and geometrically. It operates solely on color images and achieves comprehensive 3D scene understanding, much as a person can perceive a scene from a single photograph.

This unique approach gives us a head start in scene interpretation, far surpassing traditional methods.

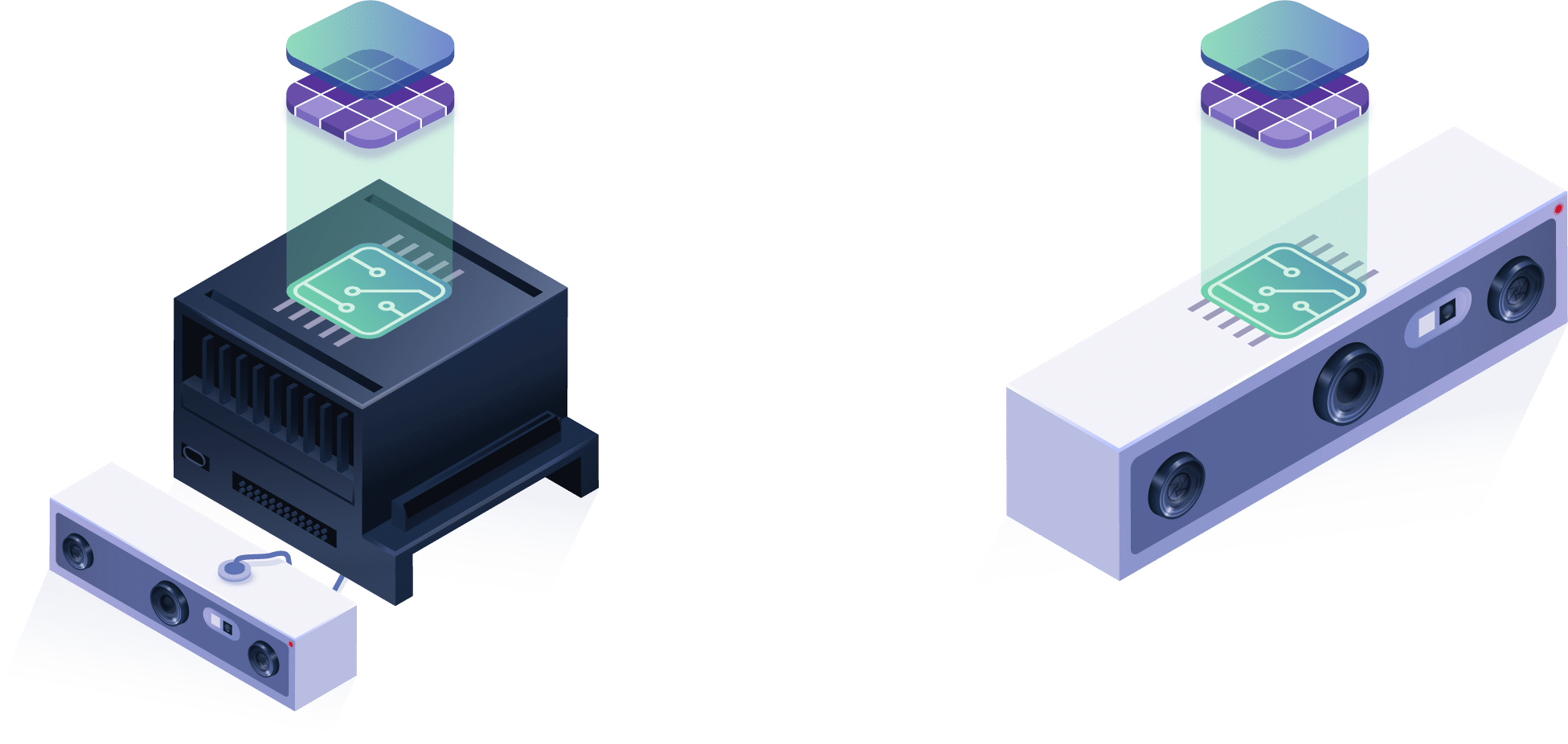

Distillation to Edge

Foundation models are notorious for their computational demands. To enable real-time operation on embedded systems, we have optimized model distillation to the extreme.

Our VFM serves as the teacher model, and the distilled network also takes in hardware-specific data such as dual-camera streams, Time-of-Flight (ToF) depth, and structured light. The result is a lightweight yet powerful network that efficiently uses both geometric and semantic cues from the VFM and the sensors.

This approach reduces computing requirements by a factor of 100, enabling real-time operation even on low-power devices.
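For readers unfamiliar with distillation, the sketch below shows the classic soft-target formulation, in which a small student network is trained to match the temperature-softened output distribution of a large teacher. This is a generic textbook technique shown for orientation; the page does not state which distillation objective Aloception uses, and the function names, logits, and temperature here are illustrative assumptions.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax, shifted by the max for numerical stability."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: Sequence[float],
                      student_logits: Sequence[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2.

    The T^2 factor is the usual convention that keeps gradient magnitudes
    comparable across temperatures.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / max(qi, 1e-12)) for pi, qi in zip(p, q) if pi > 0.0)
    return kl * temperature ** 2


# Made-up logits for three classes (e.g. free space, obstacle, person).
teacher = [4.0, 1.0, 0.5]
good_student = [3.8, 1.1, 0.4]
poor_student = [0.5, 1.0, 4.0]

print(distillation_loss(teacher, good_student))  # small: student agrees with teacher
print(distillation_loss(teacher, poor_student))  # large: student disagrees
```

In a setup like the one described above, the student would additionally receive sensor inputs (stereo, ToF, structured light) that the teacher never sees; the loss shown here only covers the part where the student imitates the teacher.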

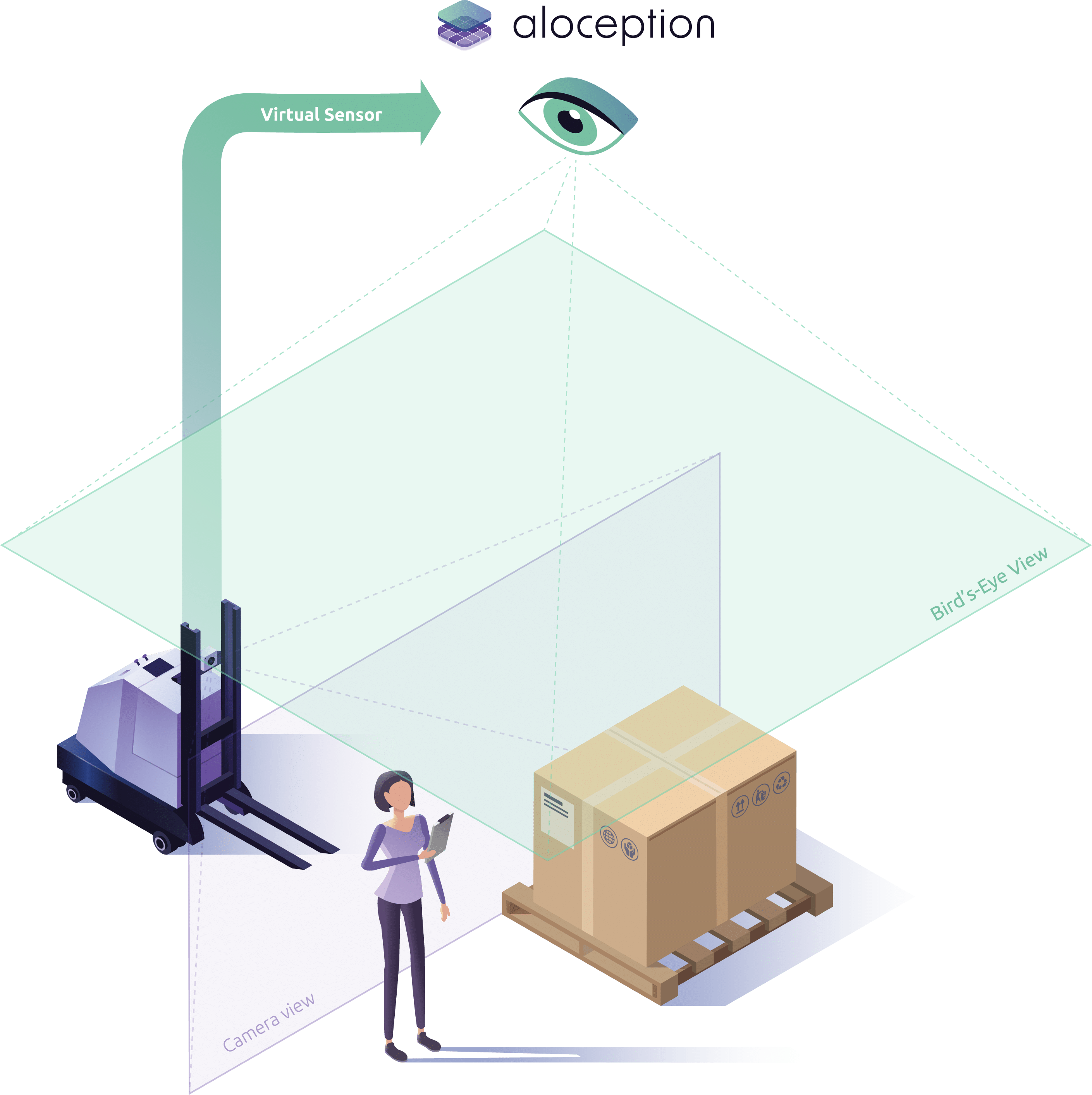

Bird’s-Eye View (BEV)

In our technology, sensor data is mapped directly to a bird’s-eye view (BEV) within the software, a feature we have integrated natively.

This method significantly improves performance. It has been proven in self-driving cars and is now being extended to a range of robots.

Interestingly, image-based sensors that are very good at depth perception or object detection do not always produce an effective BEV representation. By designing our technology to focus on bird’s-eye view, we maximize the real-world performance of mobile robots of all types.
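As a point of reference, the simplest way to place an image measurement in a top-down grid is to back-project a pixel with known depth through a pinhole camera model. The sketch below shows that geometric baseline only; it is not Aloception's native BEV mapping. It assumes a forward-facing camera whose optical axis is parallel to the ground, and the `Intrinsics`, `BevGrid`, and `pixel_to_bev_cell` names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Intrinsics:
    fx: float  # horizontal focal length, in pixels
    cx: float  # principal point column, in pixels


@dataclass(frozen=True)
class BevGrid:
    rows: int      # cells along the forward axis
    cols: int      # cells along the lateral axis, centered on the camera
    cell_m: float  # edge length of one square cell, in meters


def pixel_to_bev_cell(u: float, depth_m: float, cam: Intrinsics,
                      grid: BevGrid) -> Optional[Tuple[int, int]]:
    """Map an image column `u` with forward depth `depth_m` to a (row, col) cell.

    Returns None for invalid depth or points that fall outside the grid.
    """
    if depth_m <= 0.0:
        return None
    # Pinhole back-projection: lateral offset from the optical axis, in meters.
    x_m = (u - cam.cx) * depth_m / cam.fx
    half_width_m = grid.cols * grid.cell_m / 2.0
    col = math.floor((x_m + half_width_m) / grid.cell_m)
    row = math.floor(depth_m / grid.cell_m)
    if 0 <= row < grid.rows and 0 <= col < grid.cols:
        return (row, col)
    return None


cam = Intrinsics(fx=500.0, cx=320.0)
grid = BevGrid(rows=40, cols=20, cell_m=0.5)  # covers 20 m forward, 10 m wide

print(pixel_to_bev_cell(420.0, 5.0, cam, grid))   # (10, 12): 1 m right, 5 m ahead
print(pixel_to_bev_cell(320.0, 25.0, cam, grid))  # None: beyond the 20 m range
```

Because the lateral offset scales with depth, a small depth error on a distant pixel shifts it across several grid cells. That sensitivity is one plausible reason why strong per-pixel depth does not automatically yield a strong BEV.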

Discover the compatible hardware

Depending on use-case constraints or specifications, Aloception can be based on different cameras and integrated into different hardware configurations.